A Guide to Clinical Trial Endpoints

Steph’s Note: This week, we return with another post from Anthony Hudzik. If you haven’t checked out his Pharmacoeconomics post from earlier this month, you really - REALLY - should! Solid overview of what you should know - both for exams and for daily pharmacist life. In case you missed him, Anthony is a P4 student at the University of Texas at Austin College of Pharmacy. Anthony’s career goals involve market access and HEOR, and he will be doing a fellowship after graduation. Anthony recently had a 6-week APPE rotation with Brandon and had the time of his life (seriously, it’s the best. Brandon didn’t make me say this *nervous laugh*). Outside of pharmacy, Anthony is a world class rock climber (and by world class, I mean average) and has traveled to every country in the world except for 185 of them.

Throughout pharmacy school, you will absolutely do at least one journal club reviewing a clinical study about a new drug. When reading the studies, you will come to the methods section, in which the primary and secondary endpoints are defined.

^^Endpoints talking to all the popular journal club topics like stats and randomization strategies. (Image)

But what exactly are these endpoints?

To keep it simple, an endpoint is a measurable outcome used to determine whether the drug under investigation is beneficial.

Drug companies when their new drug isn’t more effective than placebo. (Image)

How well a drug performs according to these endpoints can be the difference between making millions of dollars or wasting years of research and money. So, these endpoints are a pretty big deal.

Big pharma has no shortage of ways to show off how amazing their hot new drugs are. So it’s really up to you as a clinician to determine if these new drugs are as good as the claims make them out to be. Don’t fall for the hype of a drug when it doesn’t actually offer any benefits compared with drugs already on the market.

Basically, don’t be a sucker for the glitzy new hot thangs on the pharmacy market - at least not without making your own determination that they’re worthy of falling for. Remember what your momma taught you.

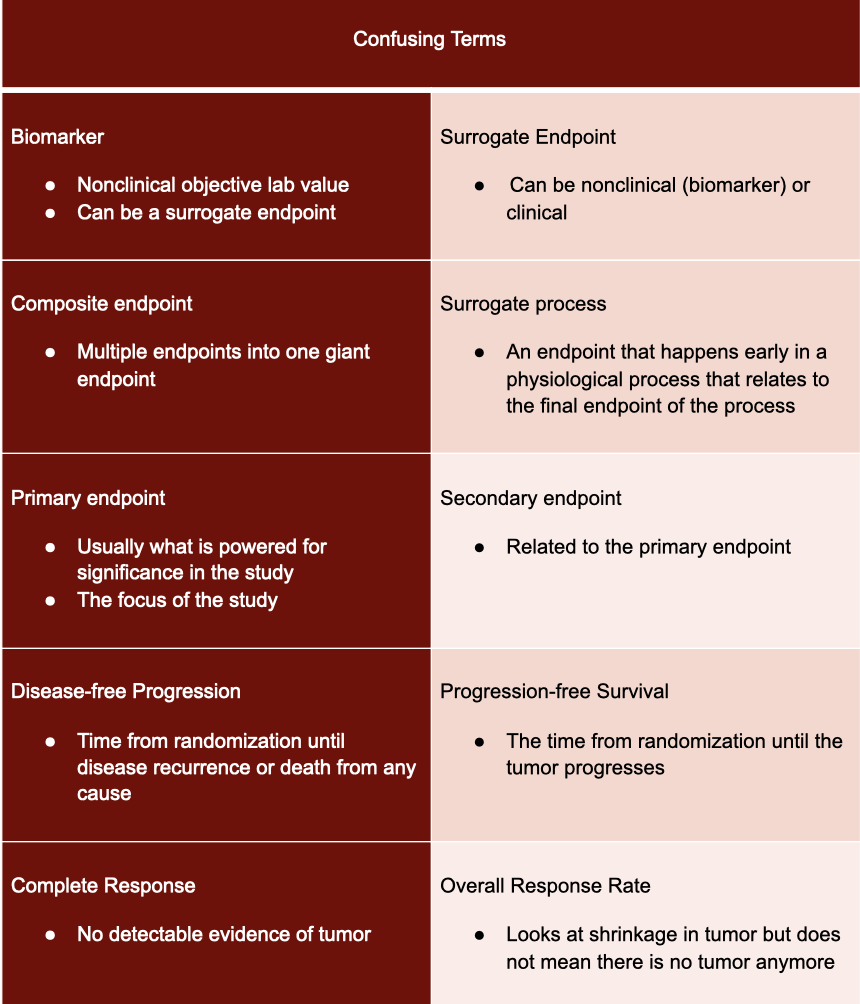

Primary Versus Secondary Endpoints

In a single study, investigators look at multiple endpoints. Some are more important than others, and some deserve extra attention. The ones we’re talking about here are the primary endpoint and the secondary endpoints.

The primary endpoint is usually the outcome the study is statistically powered to detect, meaning enough patients are enrolled to reliably pick up a meaningful difference if one exists. When looking at a primary endpoint, it is very important to determine whether it matches the objective of the study.
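To make "powered" a bit more concrete, here's a minimal sketch of the textbook normal-approximation sample-size formula for comparing two event rates (two-sided test, no continuity correction). The function name and the event rates are hypothetical, and real trials use dedicated statistical software and often more complex designs, so treat this as an illustration only.

```python
import math
from statistics import NormalDist


def patients_per_arm(p_control: float, p_drug: float,
                     alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate patients needed per arm to detect a difference between two
    event proportions with a two-sided test (normal approximation)."""
    z = NormalDist()                    # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = z.inv_cdf(power)           # about 0.84 for 80% power
    p_bar = (p_control + p_drug) / 2    # average event rate under "no difference"
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p_control * (1 - p_control) + p_drug * (1 - p_drug))
    ) ** 2
    return math.ceil(numerator / (p_control - p_drug) ** 2)


# Hypothetical primary endpoint: event rate drops from 20% (placebo) to 10% (drug)
print(patients_per_arm(0.20, 0.10))
# A smaller hypothetical effect (20% -> 15%) needs several times as many patients
print(patients_per_arm(0.20, 0.15))
```

The second call shows why a trial sized for its primary endpoint may be far too small to reliably detect a subtler effect on some other outcome.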

For example, in cardiology, troponin is a protein that is measured to see if the heart is damaged or if a heart attack has occurred. So let’s say a study is hoping to show that a new drug reduces the occurrence of strokes, but the primary endpoint is troponin levels. Since troponin is generally associated with heart attacks, it doesn’t really make sense to use it as an endpoint when talking about strokes. After seeing this, you should definitely have your BS radar on high alert and take whatever the authors conclude with a grain (or maybe a boulder, in this case) of salt.

Now let’s say instead that the primary objective was looking at reducing cholesterol, and the primary endpoint was LDL levels. That makes sense, right? Directly correlated. Happy day, you know you are dealing with an appropriate primary endpoint.

You to study investigators when they choose legit and useful endpoints. (Image)

On the other hand, secondary endpoints usually look at things related to the primary endpoint. For instance, if a company is designing a blood pressure medication, then the primary endpoint could be blood pressure. Secondary endpoints could then include things like quality of life or adherence.

Because studies usually aren’t powered for them, secondary endpoints don’t usually go through the statistical wringer. So if a study has a secondary endpoint of stroke occurrence and the authors conclude the drug reduces stroke occurrence, maybe don’t fully accept this at face value. While this could be true, that does not mean it is true - and it’s important to make that distinction.

In order to do this, you should at least check to see if the secondary endpoint was statistically powered to detect a difference from the comparator or to see if the result was statistically significant. These types of things are clues to how important that secondary endpoint is.
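As a rough illustration of that significance check, here's a minimal sketch of a pooled two-proportion z-test, one common large-sample way to compare event rates between two arms (published trials may use other tests). The function name and the event counts are hypothetical.

```python
import math
from statistics import NormalDist


def two_proportion_p_value(events_a: int, n_a: int, events_b: int, n_b: int) -> float:
    """Two-sided p-value from a pooled two-proportion z-test (normal approximation)."""
    p_a, p_b = events_a / n_a, events_b / n_b
    pooled = (events_a + events_b) / (n_a + n_b)  # overall event rate, both arms
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical secondary endpoint: strokes in 30/200 (drug) vs 45/200 (placebo)
p = two_proportion_p_value(30, 200, 45, 200)
print(f"p = {p:.3f}")  # lands just above 0.05
```

A 15% vs 22.5% stroke rate sounds impressive, but here it doesn't reach conventional significance. And even a p-value under 0.05 on an unpowered secondary endpoint is better treated as a lead to confirm than as proof.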

Are you tired of secondary endpoints yet? There are still some other things to consider…

If a secondary endpoint was part of the initial design before the study was actually conducted, it is called pre hoc. A secondary endpoint can also be created after the study is done, and this type is called post hoc.

So now you may be wondering why it matters when the endpoint was created. Well let’s say you are the one in charge of proving a drug works. All the endpoints you established when initially designing the study didn’t improve because of the drug. But you don’t want to publish a study that shows negative results...cause that’s lame. So you comb back through the data for ANYTHING that was improved for subjects receiving your drug, and woot, you find something! You create a post hoc endpoint, include that in the study, and call it a day.

As you can see, creating an endpoint after looking at final data can lead to bias. A researcher can easily look at the data and find some positives to help hide the negatives. But not all post hoc endpoints are bad!

After all the data is collected, it might come out that a certain population derived the most benefit from the new drug. A new endpoint might be created to showcase this. So in this instance, a post hoc endpoint can be useful, especially as a hypothesis to confirm in a future, properly designed study.

Well that’s confusing… So are post hoc endpoints good or bad?

Was John McClane actually working for the FDA?? (Image)

Well, it's not that easy… even the FDA struggles with it. Look at what’s happening over at Biogen with their new Alzheimer’s drug. Biogen stopped its studies of a new drug called aducanumab because it appeared to fail its pre-hoc endpoints and wasn’t better than placebo. BUT after a post-hoc analysis, Biogen concluded that the drug actually does work and submitted it to the FDA. Now the FDA is trying to sort out all that fun to see what’s true. Stay tuned in the next few months to see what the FDA does with these post-hoc endpoints.

It’s basically the pharmacy equivalent of Die Hard.

So what I’m getting at here is that when looking at post hoc vs pre hoc, just make sure you are looking at the big picture. Pre hoc is harder to manipulate since it is incorporated into the initial study design; therefore, there is a lower chance of bias. If you see post hoc endpoints, make sure you turn on that BS radar and look at ALL endpoints to glean the full story of the drug.

Clinical Versus Nonclinical Endpoints

To further complicate this mess, endpoints can either be clinical or non-clinical:

(Image)

Since every drug carries some safety risk, there has to be a meaningful benefit to taking it. This is where clinical endpoints come in.

Clinical endpoints relate to how a person feels, functions, or survives. Think of things like improved survival, symptom control, or reduced risk. As you can see, these endpoints don’t necessarily have to be objective. They can be subjective as well, like having patients rate their symptomatic improvement.

Non-clinical endpoints are objective measurements that don’t indicate how a person feels, functions, or survives. Often biomarkers are lumped into this category as well. Biomarkers are objective and easily measurable. Some examples are eosinophil, troponin, and bilirubin levels.

Take troponin, which is normally present in the serum only at very low levels. When heart muscle is damaged (like in a myocardial infarction), troponin leaks out of cardiac cells into the bloodstream, where it can be measured and used as an indicator of heart damage. Knowing your pathophysiology and lab tests is SO important for determining the significance of a biomarker!

It’s also important that a study states how its biomarkers should be interpreted. One biomarker could have several different meanings, so it's important to be on the same page as the study authors. For example, the APOE-e4 allele is strongly associated with Alzheimer’s disease. This allele could be interpreted either as having Alzheimer’s or as having an increased chance of developing Alzheimer’s. Those are two very different things…

Biomarkers can also be used in different ways to help understand how a drug affects a patient. Types of biomarkers include susceptibility/risk, diagnostic, monitoring, prognostic, predictive, pharmacodynamic/response, and safety biomarkers.

So when a biomarker is used as an endpoint, it’s imperative to be sure you are interpreting it how the study intended it to be interpreted.

Biomarkers are so important that the FDA even has a list of approved biomarkers that it recognizes:

#masks (Image)

It’s important to note that just because a biomarker didn’t make the FDA’s Who’s Who list doesn’t mean that it’s not an appropriate or important biomarker! If you read a study that has a biomarker that’s not on this list, don’t immediately throw the study in the trash. Use your clinical judgement and see if you think it’s a good biomarker! (We’ll talk about how to do this in a little bit.)

To continue with #allthefun that is biomarkers, you have to keep in mind that things change. Remember those days just a mere year ago when it was acceptable to walk around without a mask? Try that today, and you’re probably (understandably) going to get some Looks.

The same goes for biomarkers. Sometimes a once-accepted biomarker means nothing a few years later. For instance, over the years, oligoclonal bands in the CSF have fallen in and out of the diagnostic criteria for multiple sclerosis (they’re currently in, with the latest 2017 McDonald criteria, FYI).

So the point here is that biomarkers have advantages and disadvantages:

Surrogate Endpoints

At the end of the day, sometimes the endpoint we really care about is either impossible to measure or would take too long to measure (aka, it would cost too much money). For instance, assessing overall survival for a chronic disease like hypertension could take decades. And the years that the study is ongoing mean minimal access for patients to a potentially impactful medication. So, for the sake of ruling drug hopefuls in or out more quickly, surrogate endpoints are often utilized. It’s beneficial to both the patient and the drug company to get a helpful drug approved as fast as possible (can you imagine the cost of running a decades-long trial?!?).

Clinical trials are often designed using an endpoint that is faster and easier to measure than the one we really care about. For example, studies may focus on changes in blood pressure as an endpoint, then use that change to infer the long-term impact on stroke risk. Let’s look at this in a non-pharmacy way.

Say you are training for a marathon. If your training regimen helps you to run one mile in a faster time, in theory you will run the entire marathon faster. That one mile time that you’re using to track your training is a surrogate endpoint for how you expect to perform in the big marathon.

Whenever dealing with surrogate endpoints, it’s important to not just accept that they are valid. You have to use that clinical judgement of yours to make sure the connection they are trying to make actually makes sense.

Look at what happened to niacin to see how important it is to critique surrogate endpoints. In studies of niacin, HDL levels (the surrogate endpoint) improved, so the researchers concluded that niacin reduced cardiovascular events. Then, the FDA approved niacin, and everyone went on about their day. But, spoiler alert, later outcome trials found that adding niacin didn’t reduce cardiovascular events and was linked to more serious adverse effects, with a trend toward higher mortality. Whoops! Perhaps that leap from increasing HDL levels to reducing cardiovascular events wasn’t fully fleshed out…

So how do you decide what is a good surrogate endpoint? The surrogate endpoint should be in the causal path of the true endpoint.

Tying this back to pharmacy now. LDL is a great surrogate endpoint for assessing risk of a myocardial infarction because high LDL levels lead to atherosclerotic plaque formation. Plaque in turn leads to myocardial infarction and cardiovascular death. So LDL levels are appropriate surrogate endpoints for myocardial infarctions.

NOTE: This is why it’s SO important to know the pathophysiology of diseases! If you don’t have this background, you can’t really determine if a surrogate endpoint is appropriate.

It’s also important to assess whether the connection between the surrogate endpoint and the outcome of interest is just an association or true causation. If there’s just an association (like higher HDL levels being associated with reduced cardiovascular events…), changing the surrogate with a drug won’t necessarily lead to a better end outcome - and that’s a bad surrogate endpoint.

Now you may be wondering what the difference is between a biomarker and a surrogate endpoint. As seen in the LDL example, a biomarker can be a surrogate endpoint (when used correctly). But in general, surrogate endpoints can be either clinical or nonclinical (biomarkers).

Composite Endpoints

Drug companies sometimes use composite endpoints to improve their chances of successful drug approval. A composite endpoint is exactly what it sounds like: a bunch of individual endpoints combined into one umbrella endpoint. For instance, if a study investigates a drug for the prevention of vascular ischemic events, it might combine rates of MI, stroke, death, and re-hospitalizations to form a composite endpoint.

It’s important to assess composite endpoints (just like any other endpoint), but the process to evaluate composite endpoints is different compared to evaluating surrogate endpoints. For composite endpoints, it’s useful to pick apart the components of the composite, whereas for surrogate endpoints, you evaluate the causal flow to determine legitimacy of the surrogate endpoint.

(Image)

For example, imagine a composite endpoint that encompasses 4 different components: cardiovascular death, non-fatal MI, non-fatal stroke, and quality of life. It’s totally possible for the intervention or drug to be no better than placebo in several (but perhaps not all) of those components. Maybe there was no difference between drug and placebo in cardiovascular death, non-fatal MI, or non-fatal stroke, BUT the study drug was a LOT better than placebo in quality of life.

This scenario illustrates why it’s so very important that a study provides a statistical breakdown of all the components of the endpoint. If the study doesn’t do this, that should be a HUGE red flag that there could be misleading data. There’s also the problem of a composite endpoint trying to encompass too many disparate pieces to be practical or interpretable (especially if the components aren’t further analyzed).
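To see what that breakdown buys you, here's a minimal sketch with made-up numbers for the 4-component scenario above. It assumes 100 hypothetical patients per arm, each with at most one event, and treats "qol_worsening" as a hypothetical event meaning a clinically meaningful decline in quality of life. The headline composite drops from 40% to 25%, yet the entire difference comes from the quality-of-life component.

```python
COMPONENTS = ("cv_death", "nonfatal_mi", "nonfatal_stroke", "qol_worsening")


def build_arm(event_counts: dict, n_patients: int) -> list:
    """Hypothetical trial arm: each patient is the set of component events they had.
    To keep the arithmetic simple, each patient has at most one event."""
    patients = [{c} for c, k in event_counts.items() for _ in range(k)]
    patients += [set() for _ in range(n_patients - len(patients))]
    return patients


def breakdown(patients: list) -> dict:
    """Rate of each component plus the composite (patients with ANY component)."""
    n = len(patients)
    rates = {c: sum(c in events for events in patients) / n for c in COMPONENTS}
    rates["composite"] = sum(bool(events) for events in patients) / n
    return rates


drug = breakdown(build_arm(
    {"cv_death": 5, "nonfatal_mi": 6, "nonfatal_stroke": 4, "qol_worsening": 10}, 100))
placebo = breakdown(build_arm(
    {"cv_death": 5, "nonfatal_mi": 6, "nonfatal_stroke": 4, "qol_worsening": 25}, 100))

for name in ("composite", *COMPONENTS):
    print(f"{name:<16} drug {drug[name]:.0%}   placebo {placebo[name]:.0%}")
```

Reading only the first row, you'd think the drug prevents "vascular events." Reading all five, you'd see it doesn't change death, MI, or stroke at all in this made-up example.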

(Image)

The researchers investigating systemic glucocorticoid use in COPD have been criticized for this exact misstep. They used a composite primary endpoint of “first treatment failure” that included death from any cause, need for intubation, readmission to the hospital for COPD, or intensification of drug therapy. Look closely at those components. There’s a wide range in severity here, from death to increased steroid dose. How can we reasonably interpret this Kaplan-Meier curve for our patients when “treatment failure” could mean anything from addition of another medication to patient death??

The study concluded that glucocorticoids resulted in moderate improvements in clinical outcomes. Now, you may initially think that the steroids reduced deaths. But thanks to your analysis, you notice there was no difference in deaths between groups. The major difference between groups was in the increased steroid dose. As you can see from this example, analysis of the individual components of the composite endpoint is essential to avoid misconceptions about an intervention’s impact.

Just like with secondary endpoints, it’s also important to check when a study established its composite endpoint. There’s still that possibility of investigators picking and choosing components after study completion to Frankenstein together a statistically significant composite endpoint.

So both composite and surrogate endpoints have advantages and limitations, and it’s important to recognize both.

Oncology Endpoints

Oncology endpoints are a whole different beast (really, oncology is a whole beast in and of itself - hence, there’s a tl;dr cheat sheet for it here). They are not as intuitive as the endpoints of other therapeutic areas.

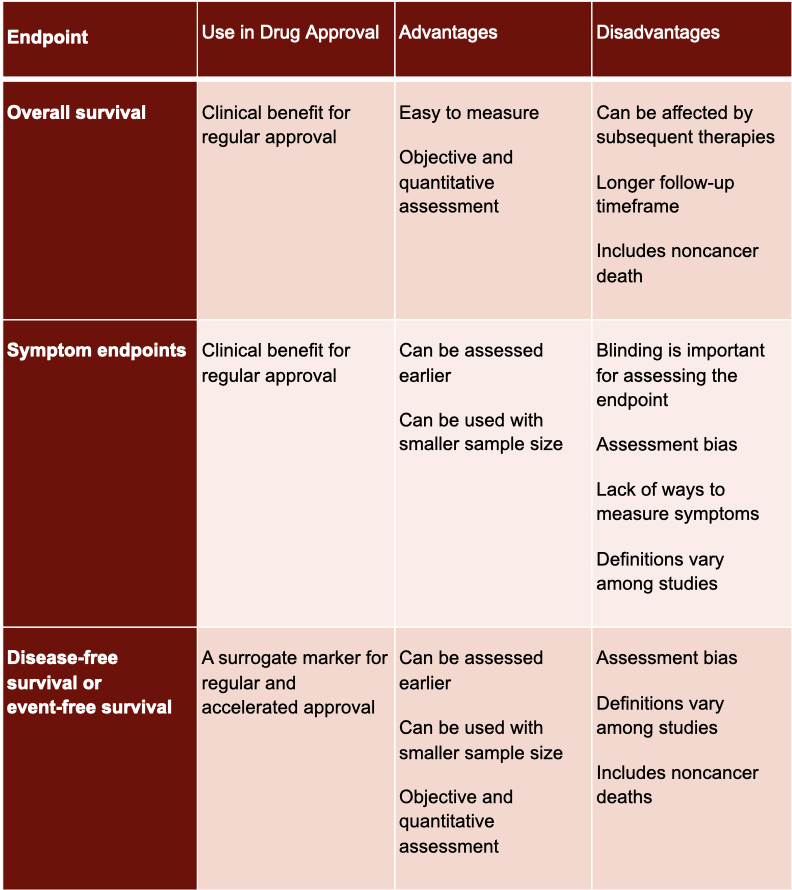

There are six major oncology endpoints:

Overall survival

Symptom endpoints

Disease-free survival / event-free survival

Objective response rate

Complete response

Progression-free survival / time to progression

The word survival is used in three of the endpoints but has a different meaning in all three! So it’s important to really understand the differences.

Overall survival is the easiest of the bunch to understand. It’s simply the time from randomization until death from any cause. While this endpoint is the most reliable cancer endpoint, it generally takes way too much time to study. So what most investigators do instead is study an endpoint that reads out faster, speeding up drug approval, while still nodding to survival.
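Overall survival is typically summarized with a Kaplan-Meier curve, which handles patients who are still alive at their last follow-up (they're "censored"). Here's a minimal sketch of the Kaplan-Meier (product-limit) estimator and a median-survival lookup; the helper names and the 8-patient dataset are hypothetical.

```python
def kaplan_meier(times, events):
    """Return [(time, survival probability)] at each time a death occurs.
    events[i] is 1 for death, 0 for censored (alive at last follow-up)."""
    data = sorted(zip(times, events))
    at_risk = len(data)
    survival = 1.0
    curve = []
    i = 0
    while i < len(data):
        t = data[i][0]
        deaths = removed = 0
        # Group everyone whose time equals t; anyone censored at t is still
        # counted as at risk for deaths at t (the usual convention)
        while i < len(data) and data[i][0] == t:
            deaths += data[i][1]
            removed += 1
            i += 1
        if deaths:
            survival *= 1 - deaths / at_risk
            curve.append((t, survival))
        at_risk -= removed  # deaths and censored patients both leave the risk set
    return curve


def median_survival(curve):
    """First time the survival probability drops to 50% or below (None if never)."""
    return next((t for t, s in curve if s <= 0.5), None)


months = [2, 3, 3, 5, 8, 9, 12, 15]  # hypothetical follow-up times
died = [1, 1, 0, 1, 0, 1, 1, 0]      # 1 = died, 0 = censored
curve = kaplan_meier(months, died)
print(curve)
print("median OS:", median_survival(curve), "months")
```

Notice that censored patients (at 3, 8, and 15 months) never make the curve drop; they only shrink the number at risk for later deaths.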

Most oncology companies use endpoints based on tumor assessment. Tumor assessments are great as surrogate endpoints and for getting approval quickly from the FDA. Endpoints based on tumor assessments include the following:

Disease-free survival (or event-free survival)

Objective response rate

Complete response

Time to progression / progression-free survival

Disease-free survival is the time from randomization until disease recurrence or death from any cause. This endpoint is most commonly used after surgery, radiotherapy, or when there is a complete response with chemotherapy. Event-free survival is similar, but its events typically include disease progression that precludes surgery, local or distant recurrence, or death due to any cause. So it’s important to make sure you know how the endpoint is defined by the study authors.

Objective response rate is the proportion of patients whose tumors shrink by a predefined amount (a partial or complete response). The standard way to gauge this is with the RECIST (Response Evaluation Criteria in Solid Tumors) criteria. RECIST provides a standardized set of rules to assess tumor shrinkage, which helps make the analysis reproducible and easier to understand.
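Here's a heavily simplified sketch of RECIST 1.1-style response calls using only the sum of target-lesion diameters at a single assessment. Real RECIST also handles non-target lesions, lymph node rules, and confirmation of best overall response, so this is illustration only. The thresholds encoded: complete response when all target lesions are gone; partial response with at least a 30% decrease from baseline; progressive disease with at least a 20% and at least 5 mm increase from the smallest sum on study, or any new lesion; otherwise stable disease. Function names and patient data are hypothetical.

```python
def recist_response(baseline_mm: float, nadir_mm: float, current_mm: float,
                    new_lesions: bool = False) -> str:
    """Simplified RECIST 1.1-style call from sums of target-lesion diameters (mm).
    nadir_mm is the smallest sum recorded on study so far (may equal baseline)."""
    increase = current_mm - nadir_mm
    # Progressive disease: any new lesion, or >=5 mm AND >=20% growth from the nadir
    if new_lesions or (increase >= 5 and 5 * increase >= nadir_mm):
        return "PD"
    # Complete response: all target lesions have disappeared
    if current_mm == 0:
        return "CR"
    # Partial response: >=30% decrease from baseline
    if 10 * (baseline_mm - current_mm) >= 3 * baseline_mm:
        return "PR"
    return "SD"  # stable disease: neither enough shrinkage nor enough growth


def objective_response_rate(responses: list) -> float:
    """ORR = share of patients with a complete or partial response."""
    return sum(r in ("CR", "PR") for r in responses) / len(responses)


# Hypothetical patients: (baseline, nadir, current, new lesions?)
patients = [
    (80, 80, 0, False),    # tumor gone
    (100, 65, 65, False),  # 35% smaller than baseline
    (60, 50, 50, False),   # about 17% smaller: not enough for PR
    (50, 40, 52, False),   # grew 12 mm (30%) from its nadir
    (70, 70, 49, False),   # exactly 30% smaller
    (90, 90, 85, True),    # shrank a bit, but a new lesion appeared
]
responses = [recist_response(*p) for p in patients]
print(responses)
print(f"ORR = {objective_response_rate(responses):.0%}")
```

Note that stable disease doesn't count toward ORR, even though a patient whose tumor stops growing may still be benefiting.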

Complete response is very straightforward and exactly as it sounds. Complete response is when there is no detectable evidence of tumor. This endpoint is primarily used in leukemia, lymphoma, and myeloma.

Time to progression is the time until the tumor progresses…again, pretty self-explanatory once you know it’s based on tumor assessment. Progression-free survival is the same as time to progression, except that it also counts death from any cause as an event.
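Because TTP and PFS can be computed from the exact same patient records, a small sketch makes the difference visible. It assumes the common convention that TTP censors a death without documented progression, while PFS counts that death as an event; a given protocol may define censoring differently. The Patient fields, function names, and data are hypothetical.

```python
from typing import NamedTuple, Optional


class Patient(NamedTuple):
    """Hypothetical record; times are months from randomization."""
    last_follow_up: float
    progression: Optional[float] = None  # time of documented progression, if any
    death: Optional[float] = None        # time of death, if any


def time_to_progression(p: Patient) -> tuple:
    """(time, event) where only progression counts as an event."""
    if p.progression is not None:
        return p.progression, 1
    # No progression: censor at death (TTP ignores deaths) or at last follow-up
    return (p.death if p.death is not None else p.last_follow_up), 0


def progression_free_survival(p: Patient) -> tuple:
    """(time, event) where progression OR death, whichever comes first, is an event."""
    event_times = [t for t in (p.progression, p.death) if t is not None]
    if event_times:
        return min(event_times), 1
    return p.last_follow_up, 0


cohort = [
    Patient(24, progression=10),            # progressed at 10 months
    Patient(15, death=15),                  # died at 15 months without progression
    Patient(30),                            # alive and progression-free at 30 months
    Patient(20, progression=12, death=20),  # progressed, then died later
]

print("TTP:", [time_to_progression(p) for p in cohort])
print("PFS:", [progression_free_survival(p) for p in cohort])
```

The second patient's death is an event for PFS but only a censoring for TTP, which is why PFS always records at least as many events as TTP on the same data.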

The final type of oncology endpoint for today involves symptom assessment. Cancer can have dramatic effects on patients’ lives, so drugs may also be approved if they improve a patient’s symptoms.

So now you may be wondering: why are there so many oncology endpoints, and when should each be used?

Just like non-oncology endpoints, these endpoints should be clinically relevant and beneficial. They should also be effectively measurable, specific, and reproducible. With oncology being a very diverse field, it is easy to see why different endpoints are needed for different types of cancer or lines of therapy.

Overall survival (OS) is the gold standard of oncology endpoints. It is both easy to measure and objective. However, it's not great for all types of cancer. Overall survival isn’t very useful for slowly progressive diseases with long expected survival times, such as hormone receptor-positive breast cancer and low- or intermediate-grade neuroendocrine tumors. Most of these patients try multiple therapies over time, and it’s tough to determine which intervention is the real reason for the overall survival.

But wait, isn’t overall survival studied in every cancer? Isn’t that contradictory?

Not really. It’s just that in these specific instances where overall survival is difficult to interpret, other endpoints are also used to help clarify the picture.

Progression-free survival (PFS) is one of the most common endpoints in oncology trials since it can be assessed earlier, is easy to measure, and is objective. PFS is usually used in locally advanced or metastatic diseases for which curative treatment options usually cannot be implemented.

While disease-free survival (DFS) is similar to PFS, it’s usually used in a different setting: adjuvant therapy, where patients show no signs of disease following curative treatment.

Finally, objective response rate (ORR) reflects anti-tumor activity. ORR really shines when dealing with targeted therapeutic agents. For instance, ORR for chemotherapy in patients with BRAF-mutant metastatic melanoma is approximately 10%; however, BRAF inhibitors provide an ORR of 50%. Besides targeted therapies, refractory cancer types for which there are no or limited therapeutic options are another scenario for using ORR.

Overall, ORR shows whether there is a measurable decrease in tumor size, and that can matter a lot on its own. For example, a tumor in the wrong part of someone’s brain can cause seizures, and a tumor in the neck can press on and close off the windpipe. In these cases, it’s easy to see how decreasing tumor size can be a real benefit for quality of life AND morbidity / mortality.

We went through a lot of terms that sound similar, so here’s an easy table to help you keep things straight:

tl;dr Endpoints

Not all endpoints are created equal. Make sure you use your judgment when reading and evaluating clinical trials.

While overall survival is the most reliable oncologic endpoint, most investigators use tumor response when demonstrating the efficacy of a drug.